ITP Camp starts today and I'm totally stoked for a month-long marathon of geeky goodness. My project will be focusing on meta-visualzation, probably using Processing, but who knows? I think it would be cool for Open Data Wiki to have a widget that shows the types of visualizations that work well with the types of data shown, or maybe an collaborative interface for categorizing information, like a simple virtual card-sort exercise.

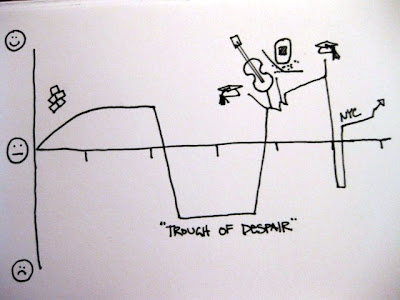

June is a red-letter month for BitsyBot Labs. I moved into my space at SoHo Haven this morning. It's so good to have a working space without feline or televised distractions.

6.01.2010

5.31.2010

James Squire Guide to Beer

I love the way visualizations simplify decisionmaking. On a recent trip to Sydney I had dinner at James Squire, and when deciding which of their signature beers to pair with my meal, the following chart made my task a lot easier:

It brought to mind this lovely number that was on display last year at Bobby Van's Steakhouse in Manhattan:

I find so much power in a simple two-axis matrix for choosing within a set of products. I'd love to see more services do this type of presentation to help consumers navigate the sometimes overwhelming choices that they are faced with. I've been thinking these types of presentations in the context of The Paradox of Choice by Barry Schwartz and Nudge by Thaler and Sunstein. Does this type of visualization make increasingly complex decisions easier to navigate? I tend to think so. I like an aromatic beer, with savory undertones, so I should choose an ale rather than a lager. Rather than struggling to identify the nuances between the myriad of beer choices, I'm given a useful map. What other types of choices can be simplified by a little visual design? I'd wager the categories are endless. It highlights to me the importance of empathy in design. If the first mantra of the information designer is to know the purpose of your data, the second may be to respect your audience. Be aware that as a designer you're steering them to a product, you may as well steer them to the product they're going to like the most.

5.24.2010

Any sufficiently advanced technology...

...is indistinguishable from magic.

Bintsley recently acquired a magic wand (courtesy of cdibona). It requires a bit of practice, but it adds a skill-building aspect to lounging on the couch. Programming the wand wasn't nearly as tedious as I'd thought it would be.

The tactile cues are fascinating. It makes me think about how hardware-software interactions give the opportunity for richer feedback, but are seldom used. My guess is it's due to the difficulty of deciphering physical codes. We are a primarily visual species. Arrows mean pretty much the same thing in nearly all human cultures, but the difference between two pulses versus one pulse of vibration must be learned.

Bintsley recently acquired a magic wand (courtesy of cdibona). It requires a bit of practice, but it adds a skill-building aspect to lounging on the couch. Programming the wand wasn't nearly as tedious as I'd thought it would be.

The tactile cues are fascinating. It makes me think about how hardware-software interactions give the opportunity for richer feedback, but are seldom used. My guess is it's due to the difficulty of deciphering physical codes. We are a primarily visual species. Arrows mean pretty much the same thing in nearly all human cultures, but the difference between two pulses versus one pulse of vibration must be learned.

3.31.2010

Transparency Camp

Transparency Camp was great! I facilitated a session on intellectual accessibility and data visualization. The conversation started with the image below:

We continued our discussion for with a meta visualization, laying out the types of data visualizations that are currently out there on a scatter plot with continuum axes. I tend to think about info vis like this, as a matrix of options that fits into different families or categories.

Something really interesting that came out of the conversation was the idea to establish a 'Visualizer Code of Ethics'. It was clear from our discussion that Information Designers such as myself can feel just as much pressure to massage our visualizations as do data analysts and statisticians. I think the challenge in a code of ethics is that a visualization relies on the story or message of the analysis. I've become increasingly conflicted regarding the use of pyramid and 3-D pie charts. I've always loathed their use, but what's the point of a graphic? If you think about the continuum of visualization methods above, statistical graphics are one small part of a universe of visual communication techniques. I've seen people painstakingly massage venn diagrams into the appropriate area relationships to represent percentages, and I have to ask, what's the point? As a concept graphic, a venn's purpose is to demonstrate intellectual relationship. If you're trying to show precise proportional relationship, there still isn't anything better than a bar chart. (Linear differences are so much easier to see than area differences.)

Maybe a good place to start with an visualization code of ethics is "know the purpose of your data".

3.11.2010

Google Public Data Explorer

I made the chart above using the new Public Data Explorer from Google. It doesn't have the capability to upload your own data set yet, but it's still pretty nifty. As is usually the case, the way the data set is structured has a huge impact on the ease of analysis. Some of the data sets have better categorization than others, and it isn't possible to select a whole set of variables at one time, which would be nice. As tools go, it's really easy to get the html needed to embed in any website. The base visualizations are really clean, which is fantastic, but the tool doesn't give much flexibility in terms of combining seemingly unrelated data sets (like showing rate of unemployment and HIV infection on the same chart). I'm looking forward to seeing how Google will handle the data uploading issue, as well as the continued development of the data files. Nat Torkington just wrote an illuminating post on open data formatting issues. I hope that (as he suggests) open data will follow in the model of the open source community.

3.09.2010

UPA Usability Metrics Workshop

I went to a great UPA workshop last month, presented by Bill Albert and Tom Tullis. It was a jam-packed day, covering a nearly overwhelming amount of content. Tom and Bill walked us through an overview of metric types and collection methods, and then concentrated on four different types of metrics: Performance, Self-Reported, Combined, and Observational.

One thing that struck me was the relationship between these metrics as they were presented. Performance and Self-Reported metrics are both gleaned through a structured interaction with a sample set of users. Combined metrics give an overall view of the health of an interaction, and are calculated using both Performance and Self-Reported metrics. Observational metrics are used less often than Performance metrics, and are seldom used in a Combined metric format.

One thing that struck me was the relationship between these metrics as they were presented. Performance and Self-Reported metrics are both gleaned through a structured interaction with a sample set of users. Combined metrics give an overall view of the health of an interaction, and are calculated using both Performance and Self-Reported metrics. Observational metrics are used less often than Performance metrics, and are seldom used in a Combined metric format.

Given that I've been working within a traditional survey-based market research environment for the past four years, I found the combination of these different types of performance indicators very interesting, especially given the practice of using self-reported data to create derived KPI (key performance indicators). It struck me that our rapidly changing access to data has the capacity to completely overhaul the way we approach measuring the success or failure of a product. Ultimately we must be able to demonstrate a profitable return on investment, but what happens when we have larger data sets to work with? Can we combine post-launch observational metrics with pre-launch performance tests to validate continuing development?

Market research and usability have their roots in the production and sale of products, not services. As we shift focus from the production of meatworld goods to the design of online services, we suddenly have the ability to approach larger and more disparate groups of consumers. With agile methodologies creating ever shorter development cycles, we have more opportunities to use our user communities as test subjects for working services. Google has famously tested 41 shades of blue for one of its toolbars, measuring click through rates to determine which shade is most appropriate. Services like Clickable provide the ability to make immediate decisions about search advertising ad copy. Users can see the real results on their pay per click online ads and use that information to refine their messaging (compare that to a six-week messaging study). Granted, this is easy to do in a low-cost medium with infinite dimensions. (When a prototype takes at least a year to develop and costs at least a million dollars to produce, the barrier to this kind of live experimentation is high). Happily, we're living in a new age now, where interfaces and services and messages are expected to shift and grow and refine with changing consumer demand.

So what does this mean for usability metrics? For virtual services we will need to find a way to integrate those traditional metrics with live Observational metrics. Heck, we could even create a way to automate this type of reporting. I've got some ideas on how to do this, but that will have to wait for another day.

One thing that struck me was the relationship between these metrics as they were presented. Performance and Self-Reported metrics are both gleaned through a structured interaction with a sample set of users. Combined metrics give an overall view of the health of an interaction, and are calculated using both Performance and Self-Reported metrics. Observational metrics are used less often than Performance metrics, and are seldom used in a Combined metric format.

One thing that struck me was the relationship between these metrics as they were presented. Performance and Self-Reported metrics are both gleaned through a structured interaction with a sample set of users. Combined metrics give an overall view of the health of an interaction, and are calculated using both Performance and Self-Reported metrics. Observational metrics are used less often than Performance metrics, and are seldom used in a Combined metric format.Given that I've been working within a traditional survey-based market research environment for the past four years, I found the combination of these different types of performance indicators very interesting, especially given the practice of using self-reported data to create derived KPI (key performance indicators). It struck me that our rapidly changing access to data has the capacity to completely overhaul the way we approach measuring the success or failure of a product. Ultimately we must be able to demonstrate a profitable return on investment, but what happens when we have larger data sets to work with? Can we combine post-launch observational metrics with pre-launch performance tests to validate continuing development?

Market research and usability have their roots in the production and sale of products, not services. As we shift focus from the production of meatworld goods to the design of online services, we suddenly have the ability to approach larger and more disparate groups of consumers. With agile methodologies creating ever shorter development cycles, we have more opportunities to use our user communities as test subjects for working services. Google has famously tested 41 shades of blue for one of its toolbars, measuring click through rates to determine which shade is most appropriate. Services like Clickable provide the ability to make immediate decisions about search advertising ad copy. Users can see the real results on their pay per click online ads and use that information to refine their messaging (compare that to a six-week messaging study). Granted, this is easy to do in a low-cost medium with infinite dimensions. (When a prototype takes at least a year to develop and costs at least a million dollars to produce, the barrier to this kind of live experimentation is high). Happily, we're living in a new age now, where interfaces and services and messages are expected to shift and grow and refine with changing consumer demand.

So what does this mean for usability metrics? For virtual services we will need to find a way to integrate those traditional metrics with live Observational metrics. Heck, we could even create a way to automate this type of reporting. I've got some ideas on how to do this, but that will have to wait for another day.

Subscribe to:

Posts (Atom)